With HPE DL380 Gen10 servers, you access the Intelligent Provisioning tool by pressing F10 during startup.

Then choose to customize the RAID configuration using the Smart Array controller tool (by default, RAID 1 is created for two disks).

Selecting the right RAID type for your IT infrastructure

The RAID setting that you select is based upon the following:

• The fault tolerance required

• The write performance required

• The amount of usable capacity that you need

Selecting RAID for fault tolerance

If your IT environment requires a high level of fault tolerance, select a RAID level that is optimized for fault tolerance.

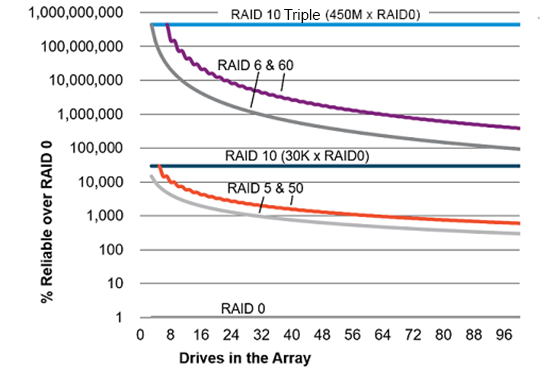

This chart shows the relationship between RAID level fault tolerance and the size of the storage array. The chart includes RAID 0, 5, 50, 10, 6, 60, and RAID 10 Triple. It plots reliability on a scale from 1 to one billion against array sizes from 0 to 96 drives.

This chart assumes that two parity groups are used for RAID 50 and RAID 60.

This chart shows that:


• RAID 10 is 30,000 times more reliable than RAID 0.

• RAID 10 Triple is 450,000,000 times more reliable than RAID 0.

• The fault tolerance of RAID 5, 50, 6, and 60 decreases as the array size increases.

Selecting RAID for write performance

If your environment requires high write performance, select a RAID type that is optimized for write performance.

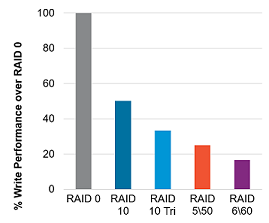

This chart shows how the write performance of RAID 10, RAID 10 Triple, 5, 50, 6, and 60 compares with that of RAID 0, as a percentage. The data in the chart assumes that performance is drive-limited and that drive write performance is the same as drive read performance.

Consider the following points:

• RAID 5, 50, 6, and 60 performance assumes parity initialization has completed.

• Write performance decreases as fault tolerance improves due to extra I/O.

• Read performance is generally the same for all RAID levels except for smaller RAID 5/6 arrays.


The table below shows the number of disk I/O operations required for every host write:

| RAID type | Disk I/O for every host write |
| --- | --- |
| RAID 0 | 1 |
| RAID 10 | 2 |
| RAID 10 Triple | 3 |
| RAID 5 | 4 |
| RAID 6 | 6 |
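Because the chart assumes drive-limited performance, write throughput for each level is roughly the combined drive IOPS divided by the disk I/O count per host write. The following Python sketch turns those counts into a percentage of RAID 0 and an IOPS estimate. The 200 IOPS-per-drive figure is a hypothetical input, and RAID 6 is given six operations, which is the backed-out write penalty this document states for RAID 6.

```python
# Disk I/O operations per host write for each RAID level.
WRITE_PENALTY = {
    "RAID 0": 1,
    "RAID 10": 2,
    "RAID 10 Triple": 3,
    "RAID 5": 4,
    "RAID 6": 6,
}


def host_write_iops(raid: str, drives: int, iops_per_drive: float) -> float:
    """Estimate random host write IOPS for a drive-limited array.

    The combined IOPS of all drives is divided by the number of drive
    operations that each host write costs.
    """
    return drives * iops_per_drive / WRITE_PENALTY[raid]


def percent_of_raid0(raid: str) -> float:
    """Write performance as a percentage of RAID 0 on the same drives."""
    return 100.0 * WRITE_PENALTY["RAID 0"] / WRITE_PENALTY[raid]


if __name__ == "__main__":
    for level in WRITE_PENALTY:
        # 8 drives at a hypothetical 200 IOPS each.
        print(f"{level:<15} {percent_of_raid0(level):5.1f}%  "
              f"{host_write_iops(level, 8, 200):6.0f} IOPS")
```

RAID 10 comes out at 50% of RAID 0 and RAID 5 at 25%, in line with the point that write performance decreases as fault tolerance improves. Controller caching and full-stripe writes can shift real results away from this drive-limited estimate.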

Selecting RAID for usable capacity

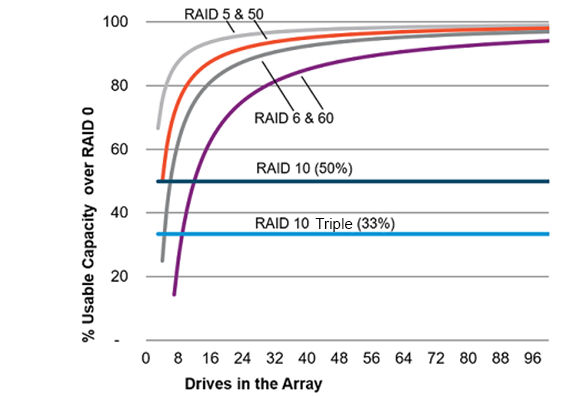

If your environment requires high usable capacity, select a RAID type that is optimized for usable capacity. The chart in this section shows the relationship between the number of drives in the array and the usable capacity, expressed as a percentage of the capacity of RAID 0.

Consider the following points when selecting the RAID type:

• Usable capacity decreases as fault tolerance improves, because more capacity is spent on redundant (mirror or parity) data.

• The usable capacity for RAID 10 and RAID 10 Triple remains flat with larger arrays.

• The usable capacity for RAID 5, 50, 6, and 60 increases with larger arrays.

• RAID 50 and RAID 60 assume two parity groups.
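The usable-capacity formulas that this document gives for each RAID level can be compared side by side. This Python sketch expresses each level's usable capacity as a percentage of RAID 0 for n equal-size drives. It assumes two parity groups for RAID 50 and RAID 60, as the chart does, and it does not check minimum drive counts.

```python
from fractions import Fraction


def usable_drives(raid: str, n: int, groups: int = 2) -> Fraction:
    """Usable capacity, in units of one drive, for n equal-size drives.

    `groups` (parity groups) only matters for RAID 50 and RAID 60.
    """
    if raid == "RAID 0":
        return Fraction(n)
    if raid == "RAID 10":
        return Fraction(n, 2)            # every block stored twice
    if raid == "RAID 10 Triple":
        return Fraction(n, 3)            # every block stored three times
    if raid == "RAID 5":
        return Fraction(n - 1)           # one drive's worth of parity
    if raid == "RAID 6":
        return Fraction(n - 2)           # two drives' worth of parity
    if raid == "RAID 50":
        return Fraction(n - groups)      # one parity drive per group
    if raid == "RAID 60":
        return Fraction(n - 2 * groups)  # two parity drives per group
    raise ValueError(f"unknown RAID level: {raid}")


def percent_usable(raid: str, n: int, groups: int = 2) -> float:
    """Usable capacity as a percentage of RAID 0 on the same drives."""
    return float(100 * usable_drives(raid, n, groups) / n)


if __name__ == "__main__":
    for n in (8, 16, 32):
        print(n, {lvl: round(percent_usable(lvl, n), 1)
                  for lvl in ("RAID 10", "RAID 5", "RAID 6", "RAID 60")})
```

The RAID 10 figure stays at 50% for every array size, while the parity levels climb toward 100% as drives are added, which is the pattern the chart describes.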

Note the minimum drive requirements for the RAID types, as shown in the table below.

| RAID type | Minimum number of drives |
| --- | --- |
| RAID 0 | 1 |
| RAID 10 | 2 |
| RAID 10 Triple | 3 |
| RAID 5 | 3 |
| RAID 6 | 4 |
| RAID 50 | 6 |
| RAID 60 | 8 |

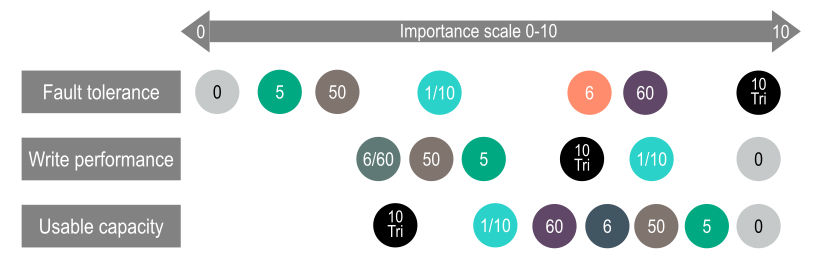

Selecting RAID for the storage solution

The chart in this section shows how well each RAID type matches the requirements of your environment. Depending on which requirements you want to optimize for, choose the RAID type as follows:

• RAID 10 Triple: Optimize for fault tolerance and write performance.

• RAID 6/60: Optimize for fault tolerance and usable capacity.

• RAID 1/10: Optimize for write performance.

• RAID 5/50: Optimize for usable capacity.

Mixed mode (RAID and HBA simultaneously)

Any drive that is not a member of a logical drive or assigned as a spare is presented to the operating system. This mode occurs by default without any user intervention and cannot be disabled. Logical drives are also presented to the operating system.

Controllers that support mixed mode (P-class and E-class) can reduce the number of controllers in the system and use drive bays within a backplane efficiently. For example, a solution that needs all drives presented as HBA drives (except for a two-drive mirror for boot support) can be accomplished with a single controller attached to a single backplane.

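| Drive LED | Method | HBA | RAID |
| --- | --- | --- | --- |
| Locate LED (Solid Blue) | SSACLI | Yes | Yes |
| Locate LED (Solid Blue) | Virtual SCSI Enclosure Services (SES) | Yes | No |
| Drive Failure LED (Solid Amber) | Auto | Yes | Yes |
| Drive Failure LED (Solid Amber) | Virtual SES | Yes | No |
| Predictive Drive Failure LED (Blinking Amber) | Auto | No | Yes |
| Predictive Drive Failure LED (Blinking Amber) | Virtual SES | Yes | No |
| Reporting | See Diagnostic Tools | Yes | Yes |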

Virtual SES is a computer protocol hosted by the Smart Array driver. It is used with disk storage devices and enclosures to report and access drive bay locations and to control LEDs. The Virtual SES SCSI devices appear as a normal enclosure and support host tools such as the Linux sg3_utils package, which contains the sg_ses tool.

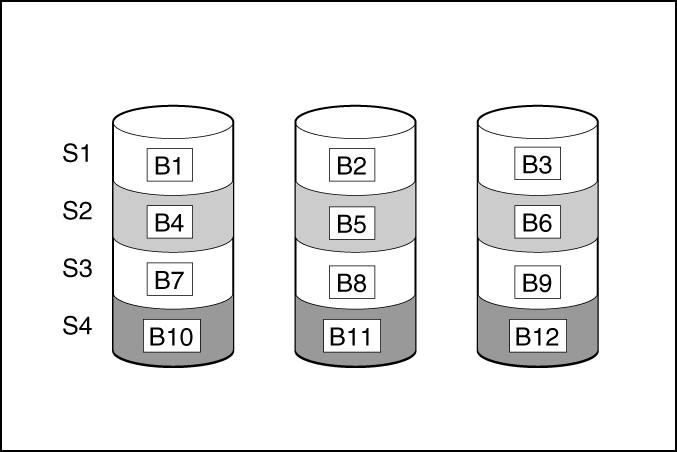

Striping

RAID 0

A RAID 0 configuration provides data striping, but there is no protection against data loss when a drive fails. However, it is useful for rapid storage of large amounts of noncritical data (for printing or image editing, for example) or when cost is the most important consideration. The minimum number of drives required is one.

This method has the following benefits:

• It is useful when performance and low cost are more important than data protection.

• It has the highest write performance of all RAID methods.

• It has the lowest cost per unit of stored data of all RAID methods.

• It uses the entire drive capacity to store data (none allocated for fault tolerance).
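At its core, RAID 0 striping is an address calculation. This Python sketch maps a logical block address to a drive and an offset using a simple round-robin layout with a fixed strip size. The strip size and block numbering are illustrative assumptions, not a description of Smart Array's internal layout.

```python
def locate_block(lba: int, drives: int, strip_blocks: int) -> tuple[int, int]:
    """Map a logical block address to (drive index, block offset on that drive).

    Consecutive strips of `strip_blocks` blocks are placed round-robin
    across the drives, so sequential I/O is spread over every drive.
    """
    strip, offset_in_strip = divmod(lba, strip_blocks)
    drive = strip % drives            # which drive holds this strip
    stripe_row = strip // drives      # how many full stripes precede it
    return drive, stripe_row * strip_blocks + offset_in_strip


if __name__ == "__main__":
    # Four drives with a hypothetical 128-block strip size.
    for lba in (0, 127, 128, 512, 700):
        print(lba, locate_block(lba, drives=4, strip_blocks=128))
```

Because no redundant copy or parity is computed, losing any one drive removes every strip mapped to it, which is why RAID 0 offers no protection.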

Mirroring

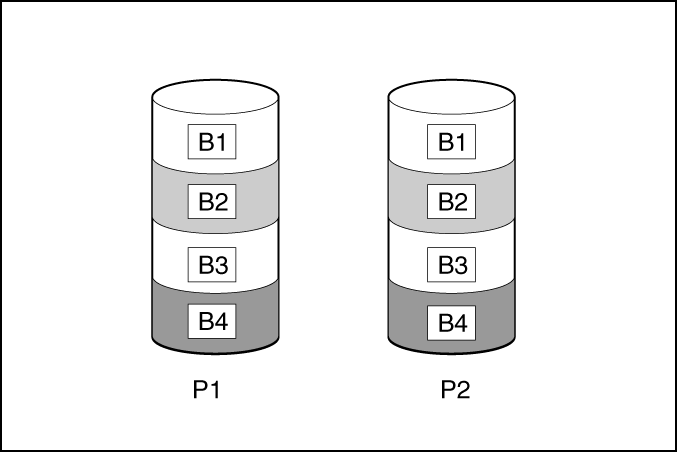

RAID 1 and RAID 1+0 (RAID 10)

In RAID 1 and RAID 1+0 (RAID 10) configurations, data is duplicated to a second drive. The usable capacity is C x (n / 2), where C is the drive capacity and n is the number of drives in the array. A minimum of two drives is required.

When the array contains only two physical drives, the fault-tolerance method is known as RAID 1.

When the array has more than two physical drives, drives are mirrored in pairs, and the fault-tolerance method is known as RAID 1+0 or RAID 10. If a physical drive fails, the remaining drive in the mirrored pair can still provide all the necessary data. Several drives in the array can fail without incurring data loss, as long as no two failed drives belong to the same mirrored pair. The total drive count must increase in increments of two drives. A minimum of four drives is required.

This method has the following benefits:

• It is useful when high performance and data protection are more important than usable capacity.

• This method has the highest write performance of any fault-tolerant configuration.

• No data is lost when a drive fails, as long as no failed drive is mirrored to another failed drive.

• Up to half of the physical drives in the array can fail.
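The rule that several drives can fail as long as no mirrored pair loses both members is easy to check mechanically. This Python sketch assumes a hypothetical numbering in which drives 2k and 2k+1 form mirrored pair k; the actual pairing on a Smart Array controller may differ.

```python
def raid10_survives(failed: set[int], drives: int) -> bool:
    """Return True if a RAID 1/10 array keeps all data after `failed` drives fail.

    Hypothetical pairing: drives 2k and 2k+1 are mirrored pair k.
    Data is lost only when both members of some pair have failed.
    """
    if drives < 2 or drives % 2:
        raise ValueError("RAID 1/10 needs an even number of drives")
    if any(not 0 <= d < drives for d in failed):
        raise ValueError("failed drive index out of range")
    pairs_hit = [d // 2 for d in failed]
    # A repeated pair index means both drives of that pair are gone.
    return len(pairs_hit) == len(set(pairs_hit))


if __name__ == "__main__":
    print(raid10_survives({0, 2, 4}, drives=6))  # True: half the drives, one per pair
    print(raid10_survives({2, 3}, drives=6))     # False: both drives of pair 1
```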

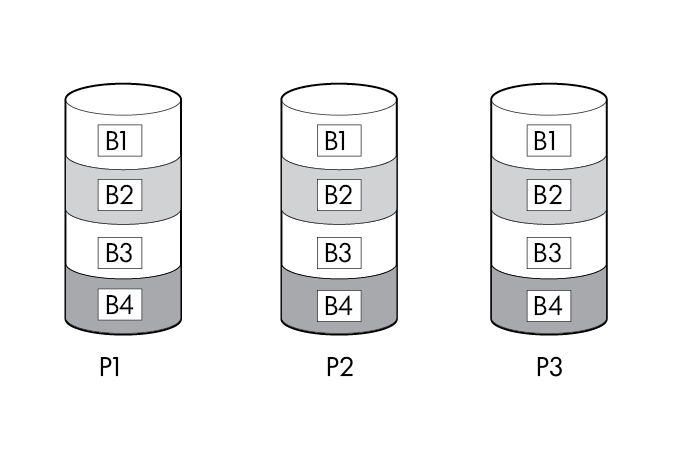

RAID 1 (Triple) and RAID 10 (Triple)

In RAID 1 Triple and RAID 10 Triple configurations, data is duplicated to two additional drives. The usable capacity is C x (n / 3), where C is the drive capacity and n is the number of drives in the array. A minimum of three drives is required.

When the array contains only three physical drives, the fault-tolerance method is known as RAID 1 Triple.

When the array has six or more physical drives, drives are mirrored in trios, and the fault-tolerance method is known as RAID 10 Triple. If a physical drive fails, the remaining two drives in the mirrored trio can still provide all the necessary data. Several drives in the array can fail without incurring data loss, as long as no three failed drives belong to the same mirrored trio. The total drive count must increase in increments of three drives.

This method has the following benefits:

• It is useful when high performance and data protection are more important than usable capacity.

• This method has the highest read performance of any configuration due to load balancing.

• This method has the highest data protection of any configuration.

• No data is lost when any two drives fail, or when more drives fail as long as no mirrored trio loses all three of its drives.

• Up to two-thirds of the physical drives in the array can fail.
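To see why trios tolerate more failures than pairs, this Python sketch enumerates every combination of k simultaneous drive failures and counts how many would lose data. It assumes mirror sets are consecutive drive numbers (a hypothetical layout) and that all failure combinations are equally likely, which ignores rebuild timing.

```python
from collections import Counter
from itertools import combinations


def loss_fraction(drives: int, copies: int, failures: int) -> float:
    """Fraction of all `failures`-drive combinations that lose data.

    `copies` is the mirror width: 2 for RAID 1/10, 3 for RAID 1/10 Triple.
    Drive d belongs to mirror set d // copies (hypothetical layout).
    Data is lost only if every drive of some mirror set has failed.
    """
    if drives % copies:
        raise ValueError("drive count must be a multiple of the mirror width")
    lost = total = 0
    for combo in combinations(range(drives), failures):
        total += 1
        per_set = Counter(d // copies for d in combo)
        if copies in per_set.values():
            lost += 1
    return lost / total


if __name__ == "__main__":
    for copies, name in ((2, "RAID 10"), (3, "RAID 10 Triple")):
        print(name, [loss_fraction(6, copies, k) for k in (1, 2, 3)])
```

With six drives, 3 of the 15 possible two-drive failures destroy a RAID 10 pair, while no two-drive failure can empty a RAID 10 Triple trio.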

Read load balancing

In each mirrored pair or trio, Smart Array balances read requests between drives based upon individual drive load.

This method has the benefit of enabling higher read performance and lower read latency.
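The exact load metric Smart Array uses is not described here. As an illustration only, this Python sketch assumes that a drive's load is its number of outstanding requests and sends each read to the least-loaded copy in the mirrored pair or trio. The function name and policy are hypothetical.

```python
def pick_read_drive(mirror_set: list[int], outstanding: dict[int, int]) -> int:
    """Choose which copy in a mirrored pair or trio services the next read.

    Hypothetical policy: pick the member with the fewest outstanding
    requests; ties go to the lowest drive number.
    """
    return min(mirror_set, key=lambda d: (outstanding.get(d, 0), d))


if __name__ == "__main__":
    load = {0: 0, 1: 0}
    for _ in range(4):
        drive = pick_read_drive([0, 1], load)
        load[drive] += 1          # the read is now queued on that drive
    print(load)                   # {0: 2, 1: 2}: reads are spread evenly
```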

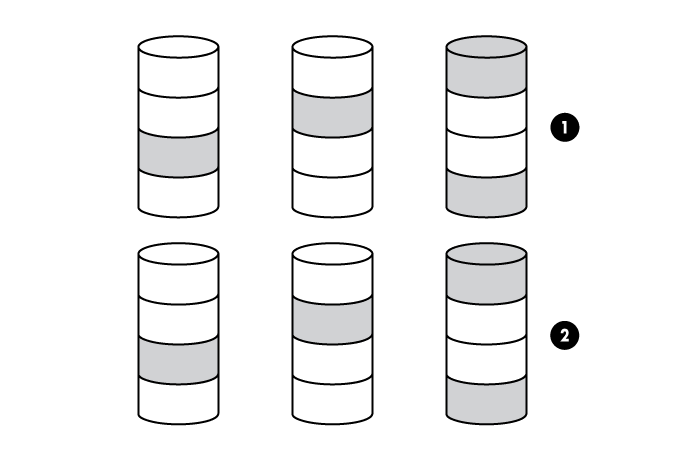

Mirror splitting and recombining

The split mirrored array feature splits any mirrored array (RAID 1, 10, 1 Triple, or 10 Triple) into multiple RAID 0 logical drives containing identical drive data.

The following options are available after creating a split mirror backup:

• Re-mirror the array and preserve the existing data. Discard the contents of the backup array.

• Re-mirror the array and roll back to the contents of the backup array. Discard existing data.

• Activate the backup array.

The re-mirrored array combines two arrays that consist of one or more RAID 0 logical drives into one array consisting of RAID 1 or RAID 1+0 logical drives.

For controllers that support RAID 1 Triple and RAID 10 Triple, this task can be used to combine:

• one array with RAID 1 logical drives and one array with RAID 0 logical drives into one array with RAID 1 Triple logical drives

• one array with RAID 1+0 logical drives and one array with RAID 0 logical drives into one array with RAID 10 Triple logical drives

This method allows you to clone drives and create temporary backups.

Parity

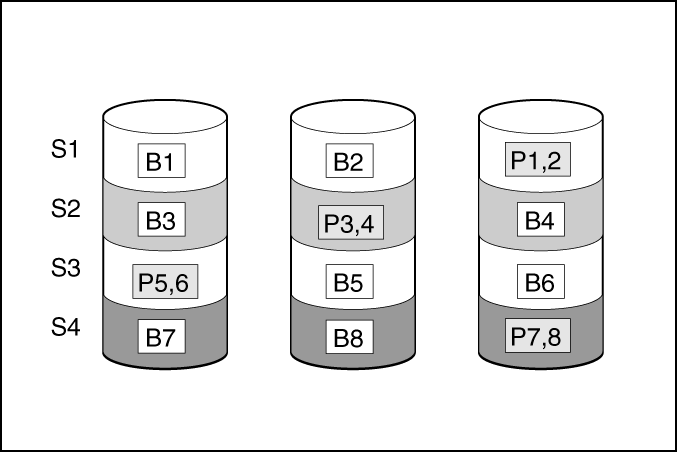

RAID 5

RAID 5 protects data using parity (denoted by Px,y in the figure). Parity data is calculated by XORing the data from each drive within the stripe. The strips of parity data are distributed evenly over every physical drive within the logical drive. When a physical drive fails, data that was on the failed drive can be recovered from the remaining parity data and user data on the other drives in the array. The usable capacity is C x (n – 1), where C is the drive capacity and n is the number of drives in the array. A minimum of three drives is required.

This method has the following benefits:

• It is useful when usable capacity, write performance, and data protection are equally important.

• It has the highest usable capacity of any fault-tolerant configuration.

• Data is not lost if one physical drive fails.
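Single parity works because XOR is its own inverse: XORing the surviving strips of a stripe, parity included, reproduces the missing one. This Python sketch shows one stripe with three data strips (as on a four-drive RAID 5 array); it does not model how parity rotates across drives.

```python
from functools import reduce


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Bytewise XOR of two equal-length strips."""
    return bytes(x ^ y for x, y in zip(a, b))


def parity(strips: list[bytes]) -> bytes:
    """Parity strip for one stripe: the XOR of all its data strips."""
    return reduce(xor_bytes, strips)


def rebuild_missing(surviving: list[bytes]) -> bytes:
    """Recover the single missing strip (data or parity) of a stripe.

    The XOR of every surviving strip, parity included, equals the lost strip.
    """
    return reduce(xor_bytes, surviving)


if __name__ == "__main__":
    data = [b"\x01\x02\x03\x04", b"\x10\x20\x30\x40", b"\xaa\xbb\xcc\xdd"]
    p = parity(data)
    # Simulate losing the drive that held data[1].
    print(rebuild_missing([data[0], data[2], p]) == data[1])  # True
```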

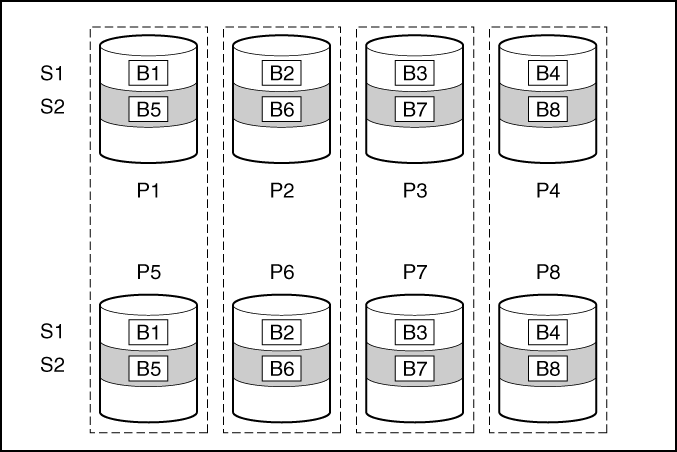

RAID 50

RAID 50 is a nested RAID method in which the constituent drives are organized into several identical RAID 5 logical drive sets (parity groups). The smallest possible RAID 50 configuration has six drives organized into two parity groups of three drives each.

For any given number of drives, data loss is least likely to occur when the drives are arranged into the configuration that has the largest possible number of parity groups. For example, four parity groups of three drives are more secure than three parity groups of four drives. However, less data can be stored on the array with the larger number of parity groups.

All data is lost if a second drive fails in the same parity group before data from the first failed drive has finished rebuilding.

A greater percentage of array capacity is used to store redundant or parity data than with non-nested RAID methods (RAID 5, for example). A minimum of six drives is required.

This method has the following benefits:

• Higher performance than for RAID 5, especially during writes.

• Better fault tolerance than either RAID 0 or RAID 5.

• Up to n physical drives can fail (where n is the number of parity groups) without loss of data, as long as the failed drives are in different parity groups.
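The claim that more parity groups trade capacity for safety can be checked with a little arithmetic. This Python sketch compares RAID 50 layouts of the same drives, assuming any two simultaneous failures are equally likely (a simplification that ignores rebuild windows).

```python
from math import comb


def raid50_tradeoff(drives: int, groups: int) -> tuple[int, float]:
    """Return (usable drives, share of two-drive failures that lose data).

    Each RAID 5 parity group gives up one drive's capacity to parity, and a
    two-drive failure loses data only if both drives are in the same group.
    """
    if groups < 2 or drives % groups or drives // groups < 3:
        raise ValueError("need at least 2 groups of 3+ drives that divide evenly")
    per_group = drives // groups
    usable = drives - groups
    fatal_pairs = groups * comb(per_group, 2)
    return usable, fatal_pairs / comb(drives, 2)


if __name__ == "__main__":
    for groups in (4, 3):
        usable, risk = raid50_tradeoff(12, groups)
        print(f"{groups} groups of {12 // groups}: {usable} drives usable, "
              f"{risk:.1%} of two-drive failures lose data")
```

For 12 drives, four groups of three leave 8 drives of usable capacity and lose data in 12 of the 66 possible two-drive failures, while three groups of four leave 9 drives usable but lose data in 18 of 66.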

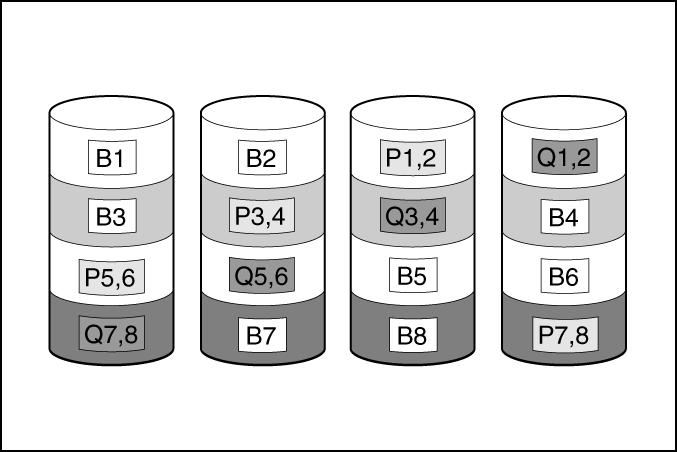

RAID 6

RAID 6 protects data using double parity. With RAID 6, two different sets of parity data are used (denoted by Px,y and Qx,y in the figure), allowing data to still be preserved if two drives fail. Each set of parity data uses a capacity equivalent to that of one of the constituent drives. The usable capacity is C x (n – 2), where C is the drive capacity and n is the number of drives in the array.

A minimum of 4 drives is required.

This method is most useful when data loss is unacceptable but cost is also an important factor. The probability that data loss will occur when an array is configured with RAID 6 (Advanced Data Guarding (ADG)) is less than it would be if it were configured with RAID 5.

This method has the following benefits:

• It is useful when data protection and usable capacity are more important than write performance.

• It allows any two drives to fail without loss of data.
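This document does not spell out how RAID 6 (ADG) computes its second parity set. One widely used scheme, the one Linux md RAID 6 uses, keeps P as a plain XOR and computes Q as a Reed-Solomon syndrome in GF(2^8) with generator 2. The Python sketch below uses that scheme as a stand-in (an assumption, not necessarily HPE's implementation) to show how two lost data strips can be solved for.

```python
from typing import Optional


def gf_mul(a: int, b: int) -> int:
    """Multiply two bytes in GF(2^8) modulo x^8 + x^4 + x^3 + x^2 + 1 (0x11D)."""
    result = 0
    while b:
        if b & 1:
            result ^= a
        a <<= 1
        if a & 0x100:
            a ^= 0x11D
        b >>= 1
    return result


def gf_pow(a: int, e: int) -> int:
    """Raise a to the power e in GF(2^8) by repeated multiplication."""
    result = 1
    for _ in range(e):
        result = gf_mul(result, a)
    return result


def gf_inv(a: int) -> int:
    """Multiplicative inverse: a^254, since every nonzero a satisfies a^255 = 1."""
    return gf_pow(a, 254)


def pq(strips: list[bytes]) -> tuple[bytes, bytes]:
    """Compute P (plain XOR) and Q (XOR of g^i * D_i, g = 2) for one stripe."""
    size = len(strips[0])
    p, q = bytearray(size), bytearray(size)
    for i, strip in enumerate(strips):
        coef = gf_pow(2, i)
        for k, byte in enumerate(strip):
            p[k] ^= byte
            q[k] ^= gf_mul(coef, byte)
    return bytes(p), bytes(q)


def recover_two(strips: list[Optional[bytes]], x: int, y: int,
                p: bytes, q: bytes) -> tuple[bytes, bytes]:
    """Rebuild lost data strips x and y (given as None) from P and Q."""
    zero = bytes(len(p))
    survivors = [zero if i in (x, y) else s for i, s in enumerate(strips)]
    p_rest, q_rest = pq(survivors)
    gx, gy = gf_pow(2, x), gf_pow(2, y)
    scale = gf_inv(gx ^ gy)
    dx, dy = bytearray(), bytearray()
    for k in range(len(p)):
        p_xy = p[k] ^ p_rest[k]            # D_x + D_y
        q_xy = q[k] ^ q_rest[k]            # g^x*D_x + g^y*D_y
        byte_x = gf_mul(q_xy ^ gf_mul(gy, p_xy), scale)
        dx.append(byte_x)
        dy.append(p_xy ^ byte_x)
    return bytes(dx), bytes(dy)


if __name__ == "__main__":
    data = [b"\x01\x02", b"\x33\x44", b"\xfe\x10", b"\x00\x99"]
    p, q = pq(data)
    damaged = [data[0], None, data[2], None]   # two drives lost
    print(recover_two(damaged, 1, 3, p, q) == (data[1], data[3]))  # True
```

P alone can repair one missing strip, exactly as in RAID 5; Q supplies the second independent equation needed when two strips are missing.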

RAID 60

RAID 60 is a nested RAID method in which the constituent drives are organized into several identical RAID 6 logical drive sets (parity groups). The smallest possible RAID 60 configuration has eight drives organized into two parity groups of four drives each.


For any given number of hard drives, data loss is least likely to occur when the drives are arranged into the configuration that has the largest possible number of parity groups. For example, five parity groups of four drives are more secure than four parity groups of five drives. However, less data can be stored on the array with the larger number of parity groups.

The number of physical drives must be exactly divisible by the number of parity groups. Therefore, the number of parity groups that you can specify is restricted by the number of physical drives. The maximum number of parity groups possible for a particular number of physical drives is the total number of drives divided by the minimum number of drives necessary for that RAID level (three for RAID 50, four for RAID 60).

A minimum of 8 drives is required.

All data is lost if a third drive in a parity group fails before one of the other failed drives in the parity group has finished rebuilding. A greater percentage of array capacity is used to store redundant or parity data than with non-nested RAID methods.

This method has the following benefits:

• Higher performance than for RAID 6, especially during writes.

• Better fault tolerance than RAID 0, 5, 50, or 6.

• Up to 2n physical drives can fail (where n is the number of parity groups) without loss of data, as long as no more than two failed drives are in the same parity group.
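This Python sketch checks the RAID 60 rule that data survives as long as no parity group has more than two failed drives. The assignment of drives to parity groups (consecutive blocks of drive numbers) is a hypothetical layout chosen for illustration.

```python
from collections import Counter


def raid60_survives(failed: set[int], drives: int, groups: int) -> bool:
    """Return True if a RAID 60 array keeps all data after `failed` drives fail.

    Hypothetical layout: parity group g holds drives g*size .. g*size + size - 1,
    where size = drives // groups. Each RAID 6 group tolerates two failures.
    """
    if groups < 2 or drives % groups or drives // groups < 4:
        raise ValueError("need at least 2 groups of 4+ drives that divide evenly")
    size = drives // groups
    failures_per_group = Counter(d // size for d in failed)
    return all(count <= 2 for count in failures_per_group.values())


if __name__ == "__main__":
    print(raid60_survives({0, 1, 4, 5}, drives=8, groups=2))  # True: 2n = 4 failures
    print(raid60_survives({0, 1, 2}, drives=8, groups=2))     # False: 3 in group 0
```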

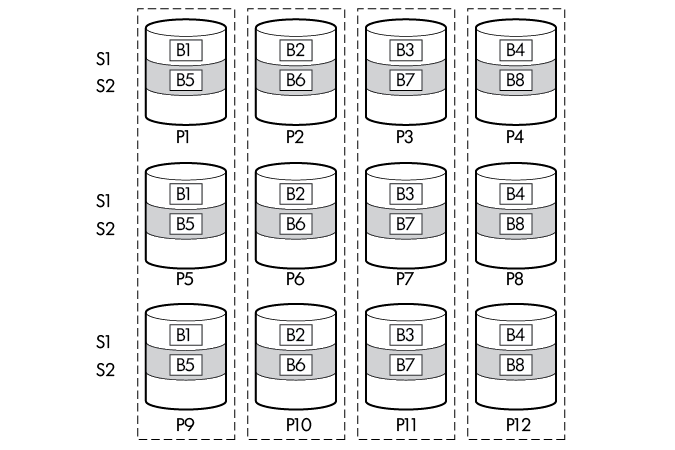

Parity groups

When you create a RAID 50 or RAID 60 configuration, you must also set the number of parity groups.

You can use any integer value greater than 1 for this setting, with the restriction that the total number of physical drives in the array must be exactly divisible by the number of parity groups.

The maximum number of parity groups possible for a particular number of physical drives is the total number of drives divided by the minimum number of drives necessary for that RAID level (three for RAID 50, four for RAID 60).

This feature has the following benefits:

• It supports RAID 50 and RAID 60.

• A higher number of parity groups increases fault tolerance.
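The divisibility rule and the per-group minimum together determine which parity-group settings are allowed. This Python sketch lists them for a given drive count; the function name is hypothetical and is not part of any HPE tool.

```python
# Minimum drives per parity group, as stated in this section.
MIN_DRIVES_PER_GROUP = {"RAID 50": 3, "RAID 60": 4}


def parity_group_options(raid: str, drives: int) -> list[int]:
    """List every valid parity-group count for `drives` physical drives.

    A count is valid when it is greater than 1, divides the drive count
    exactly, and leaves at least the per-group minimum in each group
    (so the largest candidate is drives // minimum).
    """
    minimum = MIN_DRIVES_PER_GROUP[raid]
    return [g for g in range(2, drives // minimum + 1) if drives % g == 0]


if __name__ == "__main__":
    print(parity_group_options("RAID 50", 12))  # [2, 3, 4]
    print(parity_group_options("RAID 60", 20))  # [2, 4, 5]
```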

Background parity initialization

RAID levels that use parity (RAID 5, RAID 6, RAID 50, and RAID 60) require that the parity blocks be initialized to valid values. Valid parity data is required to enable enhanced data protection through background controller surface scan analysis and higher write performance (backed-out writes). After parity initialization is complete, writes to a RAID 5, RAID 6, RAID 50, or RAID 60 logical drive are typically faster because the controller does not read the entire stripe (regenerative write) to update the parity data.

This feature initializes parity blocks in the background while the logical drive is available for access by the operating system. Parity initialization can take several hours or days to complete, depending on the size of the logical drive and the load on the controller. While the controller initializes the parity data in the background, the logical drive has full fault tolerance.

This feature has the benefit of allowing the logical drive to become usable sooner.

Rapid parity initialization

RAID levels that use parity (RAID 5, RAID 6, RAID 50, and RAID 60) require that the parity blocks be initialized to valid values. Valid parity data is required to enable enhanced data protection through background controller surface scan analysis and higher write performance (backed-out writes). After parity initialization is complete, writes to a RAID 5 or RAID 6 logical drive are typically faster because the controller does not read the entire stripe (regenerative write) to update the parity data.

The rapid parity initialization method works by overwriting both the data and parity blocks in the foreground. The logical drive remains invisible and unavailable to the operating system until the parity initialization process completes. Keeping the logical volume offline eliminates the possibility of I/O activity, which speeds up initialization and enables other high-performance initialization techniques that would not be possible if the volume were available for I/O. Once parity initialization is complete, the volume is brought online and becomes available to the operating system.

This method has the following benefits:

• It speeds up the parity initialization process.

• It ensures that parity volumes use backed-out writes for optimized random write performance.

Regenerative writes

Logical drives can be created with background parity initialization so that they are available almost instantly. During this temporary parity initialization process, writes to the logical drive are performed using regenerative writes or full-stripe writes. Any time a member drive within an array has failed, all writes that map to the failed drive are regenerative. A regenerative write is much slower because it must read from nearly all the drives in the array to calculate new parity data. The write penalty for a regenerative write is n + 1 drive operations, where n is the total number of drives in the array.

The write penalty therefore grows, and write performance drops, as the array gets larger.

This method has the following benefits:

• It allows the logical drive to be accessible before parity initialization completes.

• It allows the logical drive to be accessible when degraded.
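Using the n + 1 formula above, this Python sketch shows how the regenerative write penalty grows with array size and compares it with the fixed backed-out penalties this document gives for RAID 5/50 (four operations) and RAID 6/60 (six operations).

```python
# Backed-out (read-modify-write) penalties stated under "Backed-out writes".
BACKED_OUT_PENALTY = {"RAID 5": 4, "RAID 50": 4, "RAID 6": 6, "RAID 60": 6}


def regenerative_penalty(drives: int) -> int:
    """Drive operations per host write for a regenerative write: n + 1."""
    return drives + 1


def regenerative_slowdown(raid: str, drives: int) -> float:
    """How many times more drive operations a regenerative write needs
    than a backed-out write on the same array."""
    return regenerative_penalty(drives) / BACKED_OUT_PENALTY[raid]


if __name__ == "__main__":
    for n in (4, 8, 16, 24):
        print(n, regenerative_penalty(n), round(regenerative_slowdown("RAID 5", n), 2))
```

On an 8-drive RAID 5 array, for example, a regenerative write costs 9 drive operations, 2.25 times the four needed once parity initialization is complete.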

Backed-out writes

After parity initialization is complete, random writes to a RAID 5, 50, 6, or 60 logical drive can use a faster backed-out write operation. A backed-out write uses the existing parity to calculate the new parity data. As a result, the write penalty for RAID 5 and RAID 50 is always four drive operations, and the write penalty for RAID 6 and RAID 60 is always six drive operations. The write penalty is therefore not influenced by the number of drives in the array.

A backed-out write is also known as a “read-modify-write.”

This method has the benefit of faster RAID 5, 50, 6, or 60 random writes.
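A backed-out write relies on the XOR identity new parity = old parity XOR old data XOR new data, so only the target data strip and the parity strip are touched. This Python sketch shows the RAID 5 case. RAID 6 also reads and rewrites its second (Q) parity strip, which is why its penalty is six operations; that update is not modeled here.

```python
def xor(*strips: bytes) -> bytes:
    """Bytewise XOR of any number of equal-length strips."""
    out = bytearray(len(strips[0]))
    for strip in strips:
        for i, byte in enumerate(strip):
            out[i] ^= byte
    return bytes(out)


def backed_out_write(old_data: bytes, old_parity: bytes, new_data: bytes) -> bytes:
    """Return the new RAID 5 parity strip for a single-strip update.

    Drive operations: read old data, read old parity, write new data,
    write new parity. That is four, regardless of the array width.
    """
    return xor(old_parity, old_data, new_data)


if __name__ == "__main__":
    stripe = [b"\x01\x02", b"\x10\x20", b"\xaa\xbb"]
    parity = xor(*stripe)
    new = b"\x77\x88"
    updated = backed_out_write(stripe[1], parity, new)
    # Same result as recomputing parity over the whole new stripe.
    print(updated == xor(stripe[0], new, stripe[2]))  # True
```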

Full-stripe writes

When writes to the logical drive are sequential, or when multiple random writes that accumulate in the flash-backed write cache are found to be sequential, a full-stripe write operation can be performed. A full-stripe write allows the controller to calculate new parity using only the new data being written to the drives. There is almost no write penalty because the controller does not need to read old data from the drives to calculate the new parity. As the size of the array grows larger, the write penalty is reduced by the ratio p / n, where p is the number of parity drives and n is the total number of drives in the array.

This method has the benefit of faster RAID 5, 50, 6, or 60 sequential writes.
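This Python sketch quantifies a full-stripe write: every drive in the stripe is written once, no old data is read, and the parity share of those writes is p / n. The comparison with the four-operation RAID 5 backed-out write is illustrative and assumes the host write fills whole stripes.

```python
from fractions import Fraction


def parity_share(drives: int, parity_drives: int) -> Fraction:
    """Fraction of the drive writes in a full-stripe write that carry parity (p / n)."""
    return Fraction(parity_drives, drives)


def writes_per_data_strip(drives: int, parity_drives: int) -> Fraction:
    """Drive operations per strip of host data for a full-stripe write: n / (n - p)."""
    return Fraction(drives, drives - parity_drives)


if __name__ == "__main__":
    for n in (4, 8, 16):
        # RAID 5 has one parity strip per stripe; its backed-out penalty is 4.
        print(n, parity_share(n, 1), float(writes_per_data_strip(n, 1)), "vs 4")
```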

Spare drives

Dedicated spare

A dedicated spare is a spare drive that is shared across multiple arrays within a single RAID controller.

It supports any fault-tolerant logical drive such as RAID 1, 10, 5, 6, 50, and 60.


The dedicated spare drive activates any time a drive within the array fails.

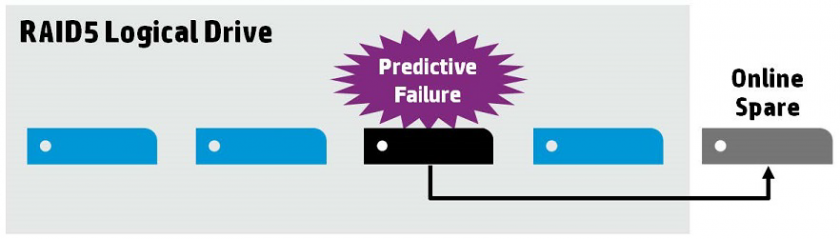

Predictive Spare Activation

Predictive Spare Activation mode will activate a spare drive anytime a member drive within an array reports a predictive

failure. The data is copied to the spare drive while the RAID volume is still healthy.

Assigning one or more online spare drives to an array enables you to postpone replacement of faulty drives.

The predictive failure drive is marked as failed and ready for removal and replacement after the copy is complete. After you install a replacement drive, the controller will restore data automatically from the activated spare drive to the new drive.

This method has the following benefits:

• It is up to four times faster than a typical rebuild.

• It can recover bad blocks during spare activation.

• It supports all RAID levels including RAID 0.

Failure spare activation

Failure spare activation mode activates a spare drive when a member drive within an array fails, and uses fault-tolerance methods to regenerate the data onto the spare.

Assigning one or more online spare drives to an array enables you to postpone replacement of faulty drives.

Auto-replace spare

Auto-replace spare allows an activated spare drive to become a permanent member of the drive array. The original drive location becomes the location of the spare drive.

This method has the benefit of avoiding the copy-back operation after replacing the failed drive.

Drive Rebuild

Rapid rebuild

Smart Array controllers include rapid rebuild technology for accelerating the rebuild process. Faster rebuild time helps restore logical drives to full fault tolerance before a subsequent drive failure can occur, reducing the risk of data loss.

Generally, a rebuild operation requires approximately 15 to 30 seconds per gigabyte for RAID 5 or RAID 6. Actual rebuild time depends on several factors, including the amount of I/O activity occurring during the rebuild operation, the number of disk drives in the logical drive, the rebuild priority setting, and the disk drive performance.

This feature is available for all RAID levels except RAID 0.
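The 15 to 30 seconds per gigabyte guideline translates into hours as follows. This Python sketch assumes the figure applies to the capacity of the drive being rebuilt; I/O load, drive count, rebuild priority, and drive performance will all move the real number.

```python
def rebuild_hours(capacity_gb: float,
                  low_s_per_gb: float = 15.0,
                  high_s_per_gb: float = 30.0) -> tuple[float, float]:
    """Rough RAID 5/6 rebuild-time range, in hours, from a seconds-per-GB rule."""
    return (capacity_gb * low_s_per_gb / 3600,
            capacity_gb * high_s_per_gb / 3600)


if __name__ == "__main__":
    for size_gb in (600, 1200, 2400):
        low, high = rebuild_hours(size_gb)
        print(f"{size_gb} GB: about {low:.1f} to {high:.1f} hours")
```

For example, a 1,200 GB drive works out to roughly 5 to 10 hours, which is the window during which a further failure in the same parity group could cause data loss.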

Puncture

Puncture is a controller feature that allows a drive rebuild to complete despite the loss of a data stripe caused by a fault condition that the RAID level cannot tolerate. When the RAID controller detects this type of fault, the controller creates a “puncture” in the affected stripe and allows the rebuild to continue. Puncturing keeps the RAID volume available, and the rest of the volume can be restored.

Future writes to the punctured stripe will restore the fault tolerance of the affected stripe. To eliminate the punctured stripe, delete the affected volume and recreate it using Rapid Parity Initialization (RPI), or erase the drives before creating the logical drive. The data affected by the punctured stripe must be restored from a previous backup.

Punctures may be minimized by performing the following:

• Update drivers and firmware.

• Increase surface scan priority to high.

• Review IML and OS system event logs for evidence of data loss or puncture.

Rebuild priority

The Rebuild Priority setting determines the urgency with which the controller treats an internal command to rebuild a failed logical drive.

• Low setting: Normal system operations take priority over a rebuild.

• Medium setting: Rebuilding occurs for half of the time, and normal system operations occur for the rest of the time.

• Medium high setting: Rebuilding is given a higher priority over normal system operations.

• High setting: The rebuild takes precedence over all other system operations.

If the logical drive is part of an array that has an online spare, rebuilding begins automatically when drive failure occurs. If the array does not have an online spare, rebuilding begins when the failed physical drive is replaced.

Before replacing drives

• Open Systems Insight Manager, and inspect the Error Counter window for each physical drive in the same array to confirm that no other drives have any errors. For more information about Systems Insight Manager, see the documentation on the Insight Management DVD or on the Hewlett Packard Enterprise website.

• Be sure that the array has a current, valid backup.

• Confirm that the replacement drive is of the same type as the degraded drive (either SAS or SATA, and either hard drive or solid-state drive).

• Use replacement drives that have a capacity equal to or larger than the capacity of the smallest drive in the array. The controller immediately fails drives that have insufficient capacity.

In systems that use external data storage, be sure that the server is the first unit to be powered down and the last unit to be powered up. Taking this precaution ensures that the system does not erroneously mark the drives as failed when the server is powered up.

In some situations, you can replace more than one drive at a time without data loss. For example:

• In RAID 1 configurations, drives are mirrored in pairs. You can replace a drive if it is not mirrored to other removed or failed drives.

• In RAID 10 configurations, drives are mirrored in pairs. You can replace several drives simultaneously if they are not mirrored to other removed or failed drives.

• In RAID 50 configurations, drives are arranged in parity groups. You can replace several drives simultaneously if the drives belong to different parity groups. If two drives belong to the same parity group, replace those drives one at a time.

• In RAID 6 configurations, you can replace any two drives simultaneously.


• In RAID 60 configurations, drives are arranged in parity groups. You can replace several drives simultaneously if no more than two of the drives being replaced belong to the same parity group.

• In RAID 1 Triple and RAID 10 Triple configurations, drives are mirrored in sets of three. You can replace up to two drives per set simultaneously.

To remove more drives from an array than the fault tolerance method can support, follow the previous guidelines for removing several drives simultaneously, and then wait until rebuild is complete (as indicated by the drive LEDs) before removing additional drives.

However, if fault tolerance has been compromised, and you must replace more drives than the fault tolerance method can support, delay drive replacement until after you attempt to recover the data.

How do I create a RAID configuration on an HPE DL380 Gen10 server?

The RAID option must be enabled in the BIOS before the system can load the RAID option ROM code.

Press F2 during startup to enter the BIOS setup.

To enable RAID, use one of the following methods, depending on your board model:

• Go to Configuration > SATA Drives, and set Chipset SATA Mode to RAID.

• Go to Advanced > Drive Configuration, and set Configure SATA As to RAID.

• Go to Advanced > Drive Configuration, set Drive Mode to Enhanced, and set the RAID option to Enabled.

Press F10 to save and exit.

If no RAID option is found in the BIOS, make sure that RAID is supported by checking the specification for your desktop board.
